Responsible AI vs Data Ethics vs Responsible Innovation

Update (June 16, 2023): This post has been updated to use ‘Responsible AI’ instead of ‘Ethical AI’ or ‘AI Ethics’, which were originally used to avoid a headache, so to speak.

Similar terms. Different meaning? If you ask a dozen practitioners in this space, you’re likely to get more than a dozen answers.

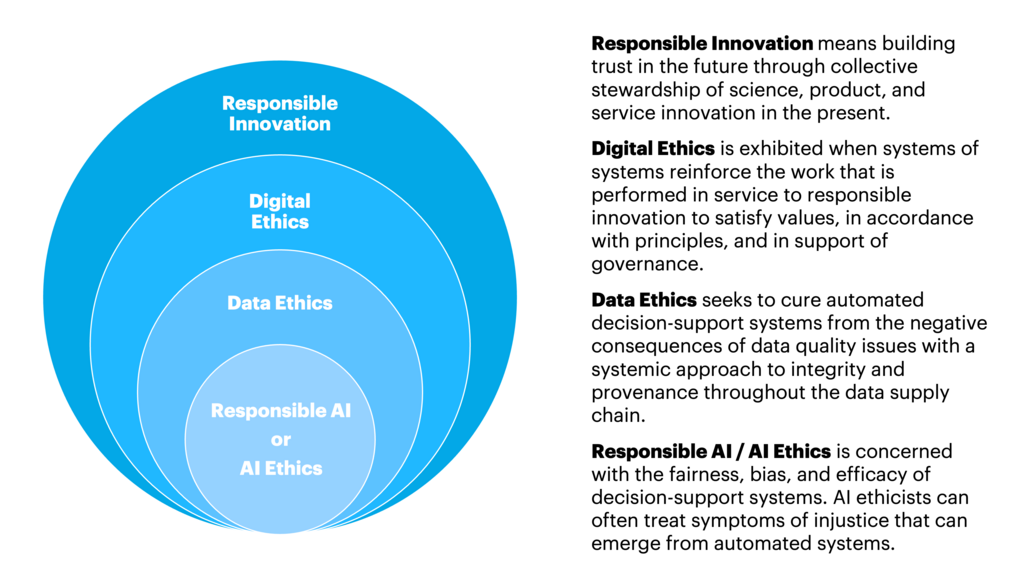

These terms are getting at the same thing, but often with different scopes of concern. To me, the distinction is fairly black and white. In a nutshell, Responsible AI (sometimes also called AI Ethics) is akin to treating the symptoms of a disease, and Data Ethics is like curing the disease.

That might sound provocative, but this is where it gets nuanced. For starters, both of these terms are evolving. When I formed my perspective, those practicing “Responsible AI” were pretty much exclusively concerned with bias and fairness – in data, algorithms, and models.

First, let’s get clear on what I mean by “AI.” In this context, I’m talking about the vast majority of Artificial Intelligence today – machine learning (ML). This is when we assemble a massive amount of data – it can be “labeled” or not – and use that data to “train” a model. The “trainer,” if you will, is the algorithm. So, data goes in one end of an algorithm and a “model” comes out the other end. Models output some information or action that is “learned” from all the data the model was trained on; when most people say “AI,” they mean “model.”
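To make the "data in, model out" picture concrete, here is a minimal sketch in plain Python. The names `train`, `labeled_data`, and `model` are illustrative only, and least-squares line fitting stands in for whatever algorithm a real team might choose; it is a toy under those assumptions, not a description of any particular system.

```python
from statistics import mean


def train(examples):
    """The 'algorithm' (the trainer): fit y = slope * x + intercept by least squares.

    `examples` is a list of (x, y) pairs, i.e. labeled data.
    Returns the 'model': a function that maps a new x to a predicted y.
    """
    xs = [x for x, _ in examples]
    ys = [y for _, y in examples]
    x_bar, y_bar = mean(xs), mean(ys)

    # Closed-form least-squares estimates for a single input variable.
    slope = sum((x - x_bar) * (y - y_bar) for x, y in examples) / sum(
        (x - x_bar) ** 2 for x in xs
    )
    intercept = y_bar - slope * x_bar

    def model(x):
        # Everything the model "knows" was learned from the training data.
        return slope * x + intercept

    return model


# Hypothetical labeled data: each input x is paired with a known answer y.
labeled_data = [(1, 2), (2, 4), (3, 6), (4, 8)]

model = train(labeled_data)  # data goes in one end, a model comes out the other
print(model(5))              # the model outputs a prediction for unseen input
```

The point of the sketch is the shape of the pipeline: whatever is wrong with `labeled_data` is baked into `model`, which is why the data itself is an entry point for intervention.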

In this context, Responsible AI has three entry points for intervention: training data, algorithm selection, and auditing model performance in both test and production environments. Again, the focus is on minimizing bias, often to achieve fairness, and in some cases, introducing bias to achieve fairness. This is role-based ethics for those who work with data and algorithms to build models; these are often data scientists.
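As an illustration of the auditing entry point, the sketch below compares how often a model returns a positive outcome for each group. The metric (a demographic parity gap), the function names, and the sample data are all assumptions made for this example; the post does not prescribe a specific fairness measure, and practitioners choose among many.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Return the share of positive (1) predictions for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += int(pred == 1)
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_gap(predictions, groups):
    """Difference between the highest and lowest group selection rates.

    0.0 means every group receives positive outcomes at the same rate;
    larger values indicate a larger disparity.
    """
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical model outputs (1 = approved, 0 = denied) and group labels.
predictions = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

rates = selection_rates(predictions, groups)
gap = demographic_parity_gap(predictions, groups)
print(rates, gap)
```

The same check can be run on test data before release and on logged production decisions afterward, which is what auditing in both environments amounts to in practice.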

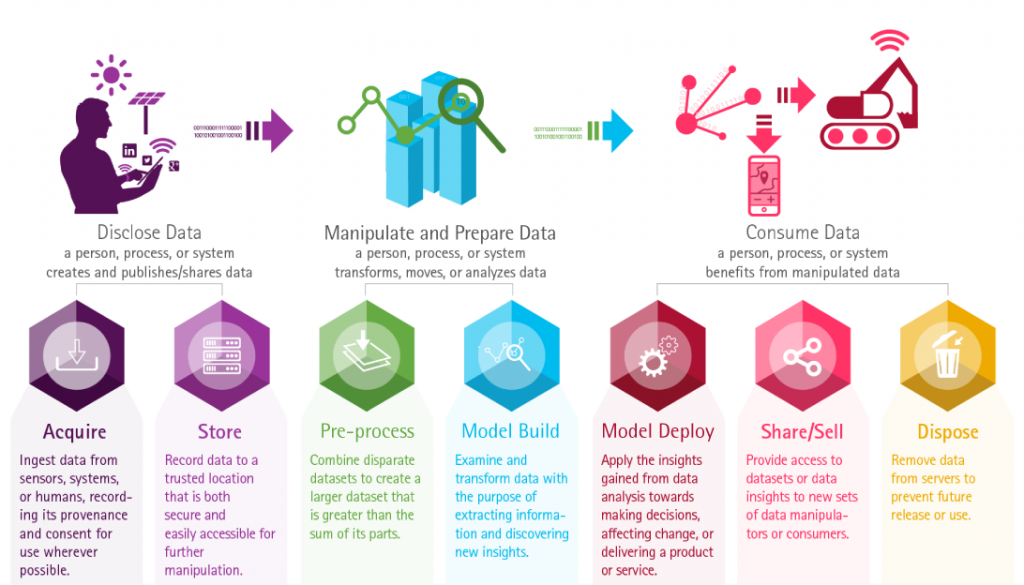

From my perspective, this is very important work, and a component of a larger portfolio of interventions. “Data Ethics,” on the other hand, is concerned with interventions throughout the data supply chain: data ingest (creation of data from sensors and ingestion from existing data, systems, or humans), storage, aggregation and pre-processing, analysis, model building, model deployment and use, sharing or selling the data, and disposal.

While Responsible AI deals with the middle part of the data supply chain, from pre-processing to sharing, Data Ethics deals with interventions and best practices across the entire data supply chain. For instance, Responsible AI is generally not concerned with the context of consent, the culture and processes by which data is ‘created’ and cared for, the ‘chain of custody’ or ‘provenance,’ the methods of recourse offered and the extent to which agency is maximized for those subjected to the technology, or even the conversations that teams might have to build more responsible products and services.

In fairness, Data Ethics does not address this full spectrum either, but it goes further than Responsible AI at addressing root causes. For example, when the individuals entering data in the field have no connection to what happens to that data later in its lifecycle, or to how it might inform significant decisions, organizations can suffer from a ‘garbage-in, garbage-out’ problem. This cultural failure, where those entering data do not do so with sufficient care and consistency, is often out of scope for Responsible AI, while it is in scope for Data Ethics because it is an issue of data provenance.

Going beyond Data Ethics, Digital Ethics deals with how organizations use data as part of post-digital transformation. Take the 2015 Volkswagen emissions scandal; this wasn’t as much a lapse in data ethics as it was a lapse in digital ethics – a lapse in how an organization manipulates information to achieve an outcome that would not be possible in an analog world. In two days, Volkswagen lost a third (approximately $25 billion) of its market capitalization when it was found to be cheating emissions tests. This lapse in digital ethics by Volkswagen exposed the brand to unprecedented risk. As a direct result, the value of used vehicles affected by the scandal dropped an average of 13%, or roughly $1,700, just three weeks after the scandal broke, according to Kelley Blue Book.

Extending beyond Digital Ethics and inclusive of all interventions, responsibilities, and culture, is “Responsible Innovation.” This seems to be the term industry leaders have settled on that includes everything from training and auditing, to engineering and data science, and ultimately to governance and culture.

Whether it’s practicing Responsible AI, Data Ethics, Digital Ethics, or Responsible Innovation, chances are that the attention to raising the ethical bar will improve the way products and services are developed, improve inclusive access, provide for more equitable outcomes, and improve trust in a brand. Once practicing any of these – and Responsible AI is often the starting point – organizations with commitment from the top will find their way toward the Responsible Innovation end of the spectrum.